The ideas behind deep learning trace back to the 1940s. In 1943, Warren McCulloch and Walter Pitts proposed the first mathematical model of an artificial neuron, inspired by the structure of the human brain. This early work laid the foundation for modern neural networks.

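Rina Dechter introduced the term “deep learning” to the machine learning community in 1986, although not in reference to neural networks; it was applied to neural networks only later, around 2000. In that setting the term emphasizes stacked layers, which enable progressive feature extraction, loosely analogous to how the brain processes information. Early models used a single layer, while today’s architectures can have hundreds or even thousands.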

The “depth” in deep learning refers to the number of layers in a network. More layers allow for better pattern recognition and hierarchical abstraction. This depth is what sets modern neural networks apart from their simpler predecessors.

What Is Deep Learning?

Deep learning represents a cutting-edge approach to artificial intelligence. It uses neural networks with multiple layers to process and interpret complex data. These layers enable the system to learn hierarchical representations, making it highly effective for tasks like image and speech recognition.

Definition and Core Concepts

At its core, deep learning is a subset of machine learning that relies on layered neural networks. Unlike traditional methods, it automates feature extraction, eliminating the need for manual engineering. This allows the system to identify patterns and relationships in data with minimal human intervention.

A network is built from three kinds of layers: an input layer, one or more hidden layers, and an output layer. Each layer transforms its input and passes the result to the next, enabling the network to build increasingly abstract representations. During training, an optimization algorithm adjusts the connection weights to reduce prediction error, improving accuracy over time.
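To make the layer-by-layer flow concrete, here is a minimal sketch of a forward pass in plain Python. The weights in `toy_layers` are hypothetical values chosen for illustration, and the helper names (`dense`, `forward`) are not from any particular library.

```python
def relu(values):
    """Element-wise ReLU: negative inputs become zero."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the input through every layer; ReLU follows all but the last."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations

# Hypothetical weights: 2 inputs -> 2 hidden units -> 1 output.
toy_layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # hidden layer
    ([[1.0, 2.0]], [0.1]),                    # output layer
]
prediction = forward([2.0, 1.0], toy_layers)
```

In a real network the weights would be learned during training rather than written by hand.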

How Deep Learning Differs from Traditional Machine Learning

Traditional machine learning relies on hand-crafted features and simpler models, such as linear models, decision trees, or shallow networks with one or two layers. In contrast, deep learning stacks three or more layers, allowing it to handle more complex tasks. This depth enables better pattern recognition and hierarchical abstraction.

Another key difference is computational requirements. Deep learning demands large datasets and significant processing power, making it more resource-intensive. However, its ability to automate feature discovery and achieve higher accuracy makes it a preferred choice for advanced applications.

“Deep learning has revolutionized fields like image recognition, natural language processing, and autonomous systems.”

Real-world examples include AlphaGo, which defeated world-champion Go player Lee Sedol in 2016, and advancements in medical imaging. These applications highlight the transformative potential of deep learning in solving complex problems.

Why Is Deep Learning Called “Deep”?

Layered architectures form the backbone of advanced machine learning systems. These structures, known as neural networks, rely on multiple layers to process and interpret data. The term “deep” refers to the number of these layers, which enable complex pattern recognition and hierarchical abstraction.

The Role of Layers in Neural Networks

Each layer in a neural network plays a specific role in processing information. The input layer receives raw data, while hidden layers extract higher-level features. For example, in image recognition, early layers detect edges, while deeper layers identify shapes and objects.

This stacking of layers allows the model to build abstract representations. Techniques like LSTM and residual connections address challenges like the vanishing gradient problem, ensuring efficient training even in deep architectures.
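The effect of a residual connection can be illustrated with a toy example. This is only a sketch: `layer` is a hypothetical stand-in that scales its input, not a real learned block, but it shows why adding the input back (`x + f(x)`) keeps a signal from fading across many layers.

```python
def layer(values, scale):
    """Stand-in for a learned transformation; here it just scales the input."""
    return [scale * v for v in values]

def plain_stack(values, depth, scale):
    """Apply the transformation `depth` times with no shortcut path."""
    for _ in range(depth):
        values = layer(values, scale)
    return values

def residual_stack(values, depth, scale):
    """Each block outputs x + f(x), so the input always has a direct path."""
    for _ in range(depth):
        transformed = layer(values, scale)
        values = [x + fx for x, fx in zip(values, transformed)]
    return values

signal = [1.0]
faded = plain_stack(signal, depth=50, scale=0.01)    # collapses toward zero
kept = residual_stack(signal, depth=50, scale=0.01)  # stays on the order of 1
```

The same argument applies to gradients flowing backward, which is the case that matters for training.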

Understanding the Depth in Deep Learning

Depth in deep learning is measured by the number of layers in a neural network. Early systems, like the 8-layer network Alexey Ivakhnenko described in 1971, demonstrated the potential of depth. Today, architectures like ResNet-152 use over 150 layers to achieve strong results on image benchmarks.

There is no universally agreed threshold, but a network is commonly called “deep” once it has at least two hidden layers. This depth enables the system to handle tasks such as MNIST digit recognition with greater accuracy than shallow models.

Research, like the 2017 Lu et al. study, highlights the relationship between width and depth in ReLU networks. These advancements continue to push the boundaries of what deep learning can achieve.

The Evolution of Deep Learning

The journey of deep learning spans decades, marked by groundbreaking discoveries. From its early roots in the 1940s to today’s advanced applications, this field has transformed how we approach artificial intelligence. Let’s explore its evolution through key developments and milestones.

Early Developments in Neural Networks

In 1965, Ivakhnenko and Lapa created the first deep learning algorithm using polynomial activation functions. This laid the groundwork for future neural networks. By 1979, Fukushima introduced the Neocognitron, a precursor to modern convolutional neural networks (CNNs).

These early models were inspired by the brain’s structure, mimicking how neurons process information. Despite limited computational power, they demonstrated the potential of layered architectures.

Key Milestones in Deep Learning History

The history of deep learning is filled with pivotal moments. Here are some of the most significant breakthroughs:

- 1986: Rumelhart, Hinton, and Williams popularized backpropagation, transforming how neural networks are trained.

- 1997: LSTM networks addressed challenges in sequential data processing.

- 2012: AlexNet’s GPU-accelerated training set new standards in image recognition.

Hardware also played a crucial role. From 1967 FORTRAN implementations to modern TPUs, advancements in computing power enabled deeper and more complex models.

Despite progress, the field faced setbacks during two AI winters (1974-80 and 1987-93). These periods of reduced funding and interest slowed research but ultimately led to stronger foundations.

Today, deep learning continues to push boundaries. Innovations like neuromorphic computing and brain-inspired architectures promise even greater advancements in the future.

Deep Learning vs. Machine Learning

Understanding the distinctions between deep learning and machine learning is essential for choosing the right approach. While both fall under the umbrella of artificial intelligence, they differ in complexity, data requirements, and computational needs. This section explores their key differences, similarities, and practical applications.

Key Differences and Similarities

Deep learning excels in handling unstructured data, such as images and audio, while traditional machine learning works better with structured datasets. For example, ResNet achieved a 3.57% top-5 error rate on ImageNet in 2015, below the roughly 5.1% estimated for a trained human annotator, a result unmatched by simpler algorithms.

Another difference lies in computational costs. Deep learning often requires GPU clusters for training, whereas machine learning can run efficiently on CPUs. However, both approaches share the goal of improving accuracy through iterative training.

When to Use Deep Learning Over Machine Learning

Choose deep learning for tasks involving complex pattern recognition, such as real-time video analysis or natural language processing. For problems built on structured, tabular data, including many fraud detection pipelines, traditional machine learning models like random forests are often more efficient and interpretable.

Hybrid approaches are also common. A pretrained deep model such as Google’s BERT can supply text embeddings that feed a simpler, more interpretable classifier. Such pipelines leverage the hierarchical feature extraction of deep learning while keeping the final decision step easier to inspect.

“The choice between deep learning and machine learning depends on the complexity of the task and the available resources.”

By understanding these distinctions, you can make informed decisions about which approach best suits your needs.

How Deep Learning Works

Neural networks form the foundation of modern AI systems. These systems rely on layered architectures to process and interpret complex data. Each layer plays a specific role, from receiving input to producing the final output. Understanding this structure is key to grasping how deep learning achieves its remarkable results.

The Structure of Neural Networks

A neural network consists of three main components: input, hidden, and output layers. The input layer receives raw data, such as images or text. Hidden layers extract higher-level features, while the output layer provides the final prediction or classification.

Weight initialization strategies, like He and Xavier (Glorot) initialization, set the starting scale of the weights so that signals neither shrink nor blow up as they pass through the layers. This helps prevent issues like vanishing gradients, enabling the model to learn effectively.
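As a rough sketch, the two schemes differ only in the standard deviation used when drawing the initial weights. The function names below are illustrative, not a library API; deep learning frameworks ship their own initializers.

```python
import math
import random

def xavier_std(fan_in, fan_out):
    """Xavier/Glorot normal: variance 2 / (fan_in + fan_out)."""
    return math.sqrt(2.0 / (fan_in + fan_out))

def he_std(fan_in):
    """He normal: variance 2 / fan_in, intended for ReLU-style activations."""
    return math.sqrt(2.0 / fan_in)

def init_weights(fan_in, fan_out, std, seed=0):
    """Draw a fan_out x fan_in weight matrix from a zero-mean Gaussian."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]

weights = init_weights(fan_in=256, fan_out=128, std=he_std(256))
```

Here `fan_in` and `fan_out` are the number of inputs and outputs of the layer being initialized.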

The Process of Training Deep Learning Models

Training a neural network involves three phases: forward pass, loss calculation, and backpropagation. During the forward pass, data flows through the layers to produce an output. The loss function measures the difference between the predicted and actual values.

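Backpropagation, whose core method (reverse-mode automatic differentiation) was formalized in 1970, computes how much each weight contributed to the loss so that the weights can be adjusted to reduce it. Techniques like dropout reduce overfitting, while batch normalization stabilizes and speeds up training, helping the model generalize to new data.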
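The three phases can be seen in a deliberately tiny example: fitting `y = w * x + b` by gradient descent. This is a sketch with hand-derived gradients for a single linear unit, not a general backpropagation implementation, and the data is made up for illustration.

```python
def train_linear(samples, learning_rate=0.05, epochs=500):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w, grad_b = 0.0, 0.0
        for x, target in samples:
            prediction = w * x + b         # forward pass
            error = prediction - target    # loss term is error ** 2
            grad_w += 2.0 * error * x / n  # chain rule back to w
            grad_b += 2.0 * error / n      # chain rule back to b
        w -= learning_rate * grad_w        # weight update
        b -= learning_rate * grad_b
    return w, b

def mse(samples, w, b):
    """Mean squared error of the model on the samples."""
    return sum((w * x + b - t) ** 2 for x, t in samples) / len(samples)

# Toy data generated by y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_linear(data)
```

In a multi-layer network the same chain-rule step is repeated layer by layer, from the output back to the input.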

Optimizers like Adam, RMSProp, and SGD with momentum fine-tune the training process. These algorithms balance speed and accuracy, making them essential for modern AI applications.
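For intuition, the update rules for two of these optimizers can be sketched on a one-dimensional toy loss, `f(x) = (x - 3)^2`. The hyperparameter values are common defaults or arbitrary choices for this example, not recommendations.

```python
import math

def grad(x):
    """Gradient of the toy loss f(x) = (x - 3)^2."""
    return 2.0 * (x - 3.0)

def sgd_momentum(x, steps=300, lr=0.1, beta=0.9):
    """SGD with momentum: a velocity term accumulates past gradients."""
    velocity = 0.0
    for _ in range(steps):
        velocity = beta * velocity + grad(x)
        x -= lr * velocity
    return x

def adam(x, steps=2000, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam: step sizes scaled by running estimates of gradient moments."""
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g      # first moment (running mean)
        v = beta2 * v + (1 - beta2) * g * g  # second moment (running mean of squares)
        m_hat = m / (1 - beta1 ** t)         # bias correction for early steps
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x
```

Both functions should drive `x` from a starting point such as `0.0` toward the minimum at `3.0`.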

“Efficient training requires both advanced algorithms and powerful hardware.”

Hardware considerations, such as GPU memory and model parameter count, play a crucial role. Larger models demand more resources, but they also deliver superior performance in complex tasks.

Key Components of Deep Learning

At the heart of modern AI systems lies a powerful framework built on interconnected components. These elements work together to process complex data and deliver accurate results. Understanding these building blocks is essential for grasping how AI achieves its remarkable capabilities.

Neurons and Layers

Neurons are the basic units of a neural network, inspired by the biological neurons in the human brain. Each neuron receives input, processes it, and passes the output to the next layer. This structure allows the network to learn and make decisions.

Layers are groups of neurons that perform specific tasks. Common types include dense, convolutional, and recurrent layers. For example, convolutional layers excel at image recognition, while recurrent layers handle sequential data like text or speech.

Modern architectures can have millions or even billions of parameters, enabling them to tackle complex problems. Memory requirements vary by layer type: Conv3D layers operating on video or volumetric data typically demand more memory than LSTMs. Visualization techniques, such as feature maps in CNNs, help researchers understand how these layers process information.

Activation Functions and Their Importance

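Activation functions determine how a neuron transforms its input. They introduce non-linearity, allowing the network to learn complex patterns. ReLU (Rectified Linear Unit), which appeared in Kunihiko Fukushima’s work as early as 1969, is now the default choice in many architectures because it is cheap to compute and less prone to vanishing gradients than sigmoid or tanh.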

Other functions, like Leaky ReLU and Swish, address issues like the “dead neuron” problem, in which a ReLU unit outputs zero for every input and stops learning. Sigmoid functions, while historically significant, cause vanishing gradients in deeper networks, so they are now mostly reserved for output layers and gating units.
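These functions are short enough to write out directly. The sketch below also shows why sigmoids struggle in deep stacks: the sigmoid derivative never exceeds 0.25, so multiplying it across many layers shrinks the gradient quickly (weights are ignored here for simplicity).

```python
import math

def relu(x):
    """Zero for negative inputs, identity otherwise."""
    return max(0.0, x)

def leaky_relu(x, slope=0.01):
    """Keeps a small slope for negative inputs to avoid 'dead' neurons."""
    return x if x > 0 else slope * x

def sigmoid(x):
    """Squashes any real input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def swish(x):
    """Swish: x * sigmoid(x)."""
    return x * sigmoid(x)

def sigmoid_grad(x):
    """Derivative of the sigmoid; its maximum is 0.25 at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)

# Best case for the gradient factor after 10 sigmoid layers.
best_case_after_10_layers = sigmoid_grad(0.0) ** 10
```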

“Choosing the right activation function is crucial for optimizing neural network performance.”

The choice of activation also guides weight initialization: He initialization is typically paired with ReLU-family activations, while Xavier (Glorot) initialization suits tanh and sigmoid. Matching the two helps prevent vanishing gradients and lets the model learn effectively.

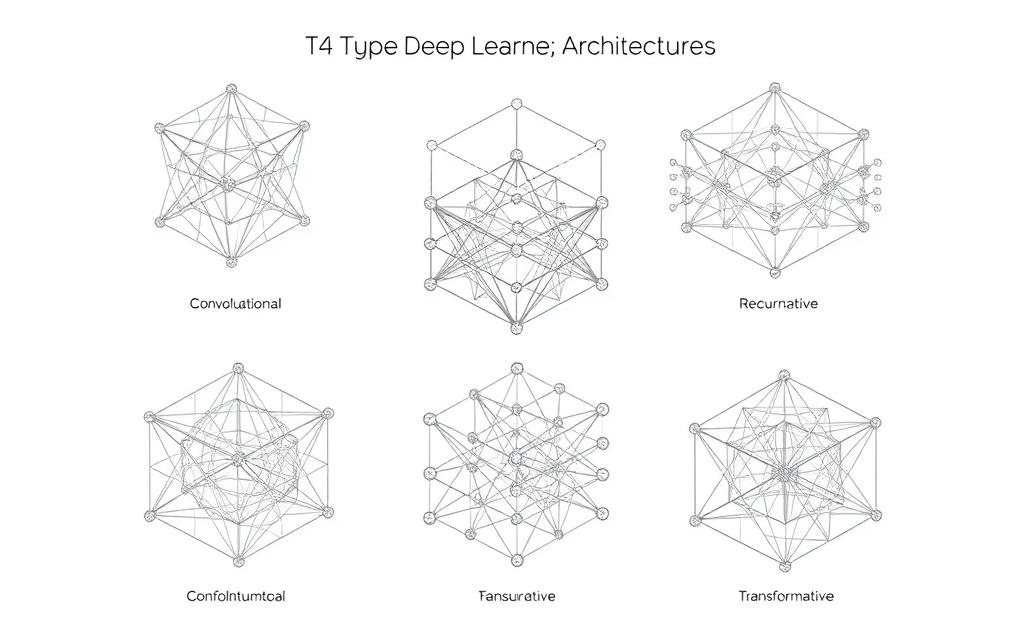

Types of Deep Learning Architectures

Modern AI systems rely on specialized architectures to solve complex problems. These frameworks are designed to handle specific tasks, from processing images to interpreting speech. Each architecture has unique strengths, making it suitable for different applications.

Convolutional Neural Networks (CNNs)

CNNs excel at processing visual data. They use convolutional layers to detect patterns in images, making them ideal for tasks like medical imaging and autonomous driving. For example, LeNet-5, introduced in 1998, was deployed to read handwritten checks, and systems based on it were processing an estimated 10 to 20% of US checks by the early 2000s.
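The core operation of a convolutional layer can be sketched in a few lines. The kernel below is hand-written to respond to vertical edges; in a trained CNN the kernel values are learned, and the tiny `image` is invented for this example.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, as used in CNN layers."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]

# A 4x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written vertical-edge kernel (illustrative only).
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, vertical_edge)
```

The resulting feature map is non-zero only in the column where the dark and bright regions meet.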

Key applications of CNNs include:

- Medical imaging (e.g., CheXNet for detecting diseases)

- Autonomous vehicles for object detection

Recurrent Neural Networks (RNNs)

RNNs are designed for sequential data, such as text and speech. They process inputs in a step-by-step manner, making them suitable for tasks like language translation and voice recognition. Variants like LSTM (1997) and GRU (2014) address challenges in long-term dependency.
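The step-by-step processing can be sketched with a scalar recurrent unit. This is a simplified Elman-style cell with hypothetical fixed weights, not an LSTM or GRU; it only shows how a hidden state carries information from one step to the next.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, bias):
    """One step: h_t = tanh(w_x * x_t + w_h * h_{t-1} + bias)."""
    return math.tanh(w_x * x + w_h * h_prev + bias)

def run_rnn(sequence, w_x=0.5, w_h=0.8, bias=0.0):
    """Process a sequence one element at a time, carrying the hidden state."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, bias)
        states.append(h)
    return states

# A single pulse followed by silence: the state remembers it, but fades.
states = run_rnn([1.0, 0.0, 0.0])
```

The fading memory visible in `states` is the long-term dependency problem that gated variants were designed to ease.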

Transformers, introduced in 2017, revolutionized natural language processing by improving efficiency and accuracy.

Generative Adversarial Networks (GANs)

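GANs consist of two competing models: a generator and a discriminator. The generator produces synthetic samples, the discriminator tries to tell them apart from real data, and each improves by competing with the other. This architecture is used to create realistic data, such as photorealistic images. StyleGAN3, released in 2021, produces lifelike human faces, showcasing the potential of GANs.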
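The competition between the two models is usually expressed through their loss functions. A minimal sketch, assuming the discriminator outputs the probability that a sample is real (`d_real` for a real sample, `d_fake` for a generated one) and using the common non-saturating generator loss:

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: low when real scores high and fake scores low."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: low when the fake fools the discriminator."""
    return -math.log(d_fake)

# A confident, correct discriminator has a lower loss than an unsure one.
confident = discriminator_loss(d_real=0.9, d_fake=0.1)
unsure = discriminator_loss(d_real=0.6, d_fake=0.4)
```

Training alternates between lowering the discriminator loss and lowering the generator loss.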

Notable breakthroughs in generative modeling include:

- BigGAN for high-resolution image generation

- DALL-E for creating images from text descriptions (a transformer-based model rather than a GAN)

“The choice of architecture depends on the task, with CNNs for images, RNNs for sequences, and GANs for generative tasks.”

Emerging architectures, like liquid neural networks and neurosymbolic AI, promise to push the boundaries of what AI can achieve. Hardware accelerators such as GPUs and TPUs further enhance performance across these architectures.

Applications of Deep Learning

From transforming industries to enhancing daily life, deep learning has become a cornerstone of modern technology. Its ability to process complex data and deliver accurate results has led to groundbreaking applications across various fields. Let’s explore some of the most impactful uses of this technology.

Image and Speech Recognition

Image recognition has seen remarkable advancements thanks to deep learning. Models like Mask R-CNN excel in object detection and instance segmentation, enabling applications in medical imaging and autonomous driving. Speech technology has advanced just as quickly: error rates on standard recognition benchmarks have fallen sharply since 2010, and models like WaveNet (2016) for speech synthesis and Whisper (2022) for speech recognition have set new standards.

Natural Language Processing (NLP)

NLP has been revolutionized by deep learning architectures. From BERT in 2018 to GPT-4 in 2023, these models have transformed how machines understand and generate human language. They power tools like chatbots, translation services, and content creation platforms, making interactions with technology more seamless and intuitive.

Autonomous Vehicles and Robotics

Autonomous vehicles rely heavily on deep learning systems to process vast amounts of sensor data. Waymo’s system, for instance, draws on millions of miles of real-world driving data. In robotics, companies like Boston Dynamics increasingly use reinforcement learning to create machines that can perform complex tasks with precision and adaptability.

Other notable applications include:

- Medical advancements like DeepMind’s AlphaFold for protein folding

- Industrial uses such as predictive maintenance with LSTM networks

- Creative tools like Stable Diffusion for image generation

“The versatility of deep learning continues to push the boundaries of what technology can achieve.”

Deep Learning in Everyday Life

From smart homes to secure transactions, advanced technologies are reshaping daily routines. These systems are no longer confined to labs but are now integral to how people interact with the world. Whether it’s managing household tasks or safeguarding financial data, the impact of these innovations is profound.

Digital Assistants and Smart Devices

Voice-activated assistants like Alexa and Google Assistant have become household staples. Many US households now rely on them for tasks like setting reminders, playing music, or controlling smart devices. Nest thermostats and Ring cameras are prime examples of how these applications enhance convenience and security.

Streaming platforms like Netflix use deep learning to personalize recommendations, which by the company’s own account drive roughly 75% of what people watch. This integration of learned models into everyday products shows how versatile modern neural networks have become.

Fraud Detection and Cybersecurity

Financial institutions are leveraging these advancements to combat fraud. PayPal’s detection system blocks over $4 billion in fraudulent transactions annually. By analyzing transaction data in real-time, it identifies anomalies and prevents unauthorized activities.

In cybersecurity, companies like Darktrace use these tools to detect enterprise threats. Similarly, Facebook has reported that about 97% of the hate speech it removes is detected by automated systems before any user reports it, contributing to safer online spaces. These innovations demonstrate the critical role of intelligent systems in protecting sensitive information.

The Role of Data in Deep Learning

Data serves as the backbone of modern AI systems, driving their ability to learn and adapt. Without high-quality data, even the most advanced algorithms struggle to deliver accurate results. From training data to feature extraction, every step relies on the availability and quality of information.

Importance of Big Data

Big data plays a crucial role in enhancing the performance of AI models. For instance, ImageNet, a widely used dataset, contains over 14 million labeled images across 20,000 categories. This vast amount of data allows models to learn intricate patterns and improve accuracy.

Data augmentation techniques, such as rotation and flipping, can multiply the effective size of a dataset several times over. This is particularly useful when training data is limited. Synthetic data, such as 3D scenes generated with NVIDIA’s Omniverse, further expands the possibilities.
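A minimal sketch of flip and rotation augmentation on an image stored as a nested list follows. Real pipelines typically use an image library and random transformations; the function names here are illustrative.

```python
def flip_horizontal(image):
    """Mirror each row left to right."""
    return [list(reversed(row)) for row in image]

def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def augment(image):
    """Return the original plus one flipped and three rotated variants."""
    variants = [image, flip_horizontal(image)]
    rotated = image
    for _ in range(3):
        rotated = rotate_90(rotated)
        variants.append(rotated)
    return variants

sample = [[1, 2], [3, 4]]
augmented = augment(sample)  # five variants from one labeled image
```

Each variant keeps the original label, so one annotated example yields several training examples.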

Data Preprocessing and Feature Extraction

Before data can be used, it must undergo preprocessing. This includes handling missing values, removing outliers, and ensuring consistency. Cleaning pipelines are essential to maintain the integrity of the information.
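These cleaning steps can be sketched with the standard library. The median imputation, the 1.5 x IQR outlier rule, and the sample values in `raw` are assumptions made for this example; the right choices depend on the dataset.

```python
import statistics

def impute_missing(values):
    """Replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = statistics.median(observed)
    return [fill if v is None else v for v in values]

def remove_outliers(values, k=1.5):
    """Drop values outside [Q1 - k * IQR, Q3 + k * IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v for v in values if low <= v <= high]

def standardize(values):
    """Rescale to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]

# Hypothetical sensor readings with one gap and one obvious outlier.
raw = [10.0, 12.0, None, 11.0, 13.0, 12.0, 11.0, 500.0]
cleaned = standardize(remove_outliers(impute_missing(raw)))
```

Running the steps in this order keeps the outlier from distorting the mean and standard deviation used for scaling.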

Feature extraction is another critical step. While traditional methods rely on manual engineering, modern systems automate this process. This allows models to identify relevant features without human intervention, improving efficiency and accuracy.

Bias mitigation is also a key consideration. Ensuring that training data is diverse and representative of the people it will affect helps create fairer and more reliable models. Storage solutions for petabyte-scale datasets are equally important, enabling the handling of massive amounts of data.

Challenges in Deep Learning

Advanced AI systems face significant hurdles in achieving optimal performance. These challenges range from technical issues like overfitting to resource-intensive training processes. Addressing these obstacles is crucial for improving model accuracy and efficiency.

Overfitting and Underfitting

Overfitting occurs when a model performs well on training data but poorly on new data. This happens when the model learns noise instead of patterns. Solutions include early stopping, dropout, and weight decay.

Underfitting, on the other hand, results from insufficient learning. The model fails to capture the underlying trends in the data. Balancing these issues is key to achieving reliable results.
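Early stopping, one of the remedies for overfitting mentioned above, is simple to sketch: track the validation loss and stop once it has not improved for a set number of epochs. The `patience` value and the loss history below are hypothetical.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the index of the best epoch, stopping once validation loss
    has failed to improve for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch

# Validation loss improves, then rises as the model starts to overfit.
history = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.40]
stop_at = early_stopping_epoch(history)
```

Note that training halts before the final value in `history` is ever seen, which is the trade-off that `patience` controls.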

Computational Requirements and Resource Constraints

Training frontier models like GPT-4 can reportedly cost over $100 million in computational resources. This highlights the need for efficient training methods and hardware optimization.

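Model compression techniques such as pruning, quantization, and distillation can reduce model size by up to 10x with minimal accuracy loss. This makes deployment on edge devices more feasible. However, energy consumption remains a concern: one 2019 estimate (Strubell et al.) put the training of a large Transformer with neural architecture search at roughly 626,000 lbs of CO2-equivalent emissions.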
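Quantization is one widely used compression technique. The sketch below shows symmetric 8-bit quantization of a few hypothetical weights; storing 8-bit integers instead of 32-bit floats gives a 4x reduction on its own, and larger savings usually come from combining several techniques.

```python
def quantize_int8(weights):
    """Symmetric linear quantization of floats to integers in [-127, 127].

    Assumes at least one weight is non-zero.
    """
    scale = max(abs(w) for w in weights) / 127.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Map the integers back to approximate float values."""
    return [q * scale for q in quantized]

weights = [0.50, -1.27, 0.03, 0.91]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

The rounding error per weight is bounded by half of `scale`, which is why accuracy often drops only slightly.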

| Challenge | Solution |
|---|---|
| Overfitting | Early stopping, dropout, weight decay |
| Underfitting | Increase model complexity, more data |
| Hardware limitations | GPU memory optimization, TensorRT |
| Energy consumption | Carbon-aware scheduling |

Explainability is another challenge. Tools like LIME and SHAP help interpret black-box models, making them more transparent. Transfer learning involves tradeoffs as well: a pretrained model may transfer poorly when the target domain differs substantially from the data it was trained on. Edge deployment often depends on inference optimizers such as TensorRT to run efficiently.

Advancements in Deep Learning

Recent years have witnessed groundbreaking progress in AI technologies. These advancements are not only enhancing existing systems but also opening doors to new possibilities. From solving complex scientific problems to improving everyday life, the impact of these innovations is profound.

One of the most notable breakthroughs is AlphaFold, whose database in 2022 expanded to cover predicted structures for over 200 million proteins. This achievement has revolutionized biological research, helping scientists understand diseases and develop treatments more effectively.

Recent Breakthroughs and Innovations

Transformer architectures have expanded beyond natural language processing to vision tasks. Models like Vision Transformers (ViT) are now setting new benchmarks in image recognition. These algorithms are proving to be versatile and highly efficient.

Neuromorphic chips, such as Intel’s Loihi 2, are another leap forward. They mimic the human brain’s structure, offering significant energy efficiency. This makes them ideal for edge computing and real-time applications.

Federated learning is gaining traction for its privacy-preserving approach. By training models on decentralized data, it ensures sensitive information remains secure. This method is particularly useful in healthcare and finance.
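The aggregation step at the heart of federated learning can be sketched as a weighted average of client model weights, in the spirit of federated averaging. The two clients and their values below are hypothetical.

```python
def federated_average(client_updates):
    """Average client weights, weighted by each client's sample count.

    client_updates: list of (weights, num_samples) pairs.
    """
    total = sum(n for _, n in client_updates)
    size = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total
        for i in range(size)
    ]

# Only weights and sample counts leave each site, never the raw records.
updates = [
    ([1.0, 0.0], 100),  # hypothetical hospital A
    ([3.0, 2.0], 300),  # hypothetical hospital B
]
global_weights = federated_average(updates)
```

A full system would repeat this over many rounds, sending the averaged model back to the clients for further local training.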

The Future of Deep Learning Technology

Quantum machine learning is on the horizon, with Google’s quantum supremacy experiments paving the way. These advancements promise to solve problems that are currently beyond classical computing capabilities.

Biological neural interfaces, like Neuralink, are exploring the integration of AI with the human brain. This could lead to breakthroughs in medical treatments and enhanced cognitive abilities.

Climate modeling is another area benefiting from AI. NVIDIA’s Earth-2 project aims to create a digital twin of the planet, enabling better predictions and solutions for environmental challenges.

| Advancement | Impact |
|---|---|
| AlphaFold | Revolutionized protein structure prediction |
| Vision Transformers | Improved image recognition benchmarks |
| Loihi 2 | Enhanced energy efficiency in neuromorphic computing |
| Federated Learning | Enabled privacy-preserving model training |

“The future of AI lies in its ability to solve humanity’s greatest challenges, from healthcare to climate change.”

As research continues, the synergy between AI and other technologies will drive further innovation. The potential for artificial general intelligence (AGI) remains a key focus, with organizations like Anthropic leading the way in safety and ethical considerations.

Deep Learning and Artificial Intelligence

Artificial intelligence continues to evolve, with deep learning playing a pivotal role in its advancement. As a subset of machine learning, deep learning leverages layered neural networks to process complex data. This approach has become a cornerstone of modern AI systems, driving innovations across industries.

How Deep Learning Fits into AI

Deep learning is a critical component of the broader artificial intelligence landscape. WIPO’s 2019 Technology Trends report on artificial intelligence identified it as the fastest-growing AI technique in patent filings, with an average annual growth rate of about 175% between 2013 and 2016. This growth highlights its importance in solving complex problems, from image recognition to natural language processing.

Within the AI hierarchy, deep learning sits as a subset of machine learning. It automates feature extraction, eliminating the need for manual engineering. This allows systems to identify patterns and relationships in data with minimal human intervention.

The Synergy Between Deep Learning and Other AI Technologies

Hybrid systems combine deep learning with symbolic reasoning to enhance performance. For example, IBM’s Watson integrates deep learning with rule-based algorithms to deliver accurate results in healthcare and finance. This synergy enables more robust and adaptable AI solutions.

Edge AI is another area where deep learning shines. TensorFlow Lite allows neural networks to run efficiently on IoT devices, bringing AI capabilities to the edge. This reduces latency and improves real-time decision-making.

Explainable AI (XAI) techniques, like layer-wise relevance propagation, make deep learning models more transparent. This is crucial for building trust in AI systems, especially in sensitive applications like healthcare and autonomous vehicles.

“The integration of deep learning with other AI technologies is driving unprecedented advancements in artificial intelligence.”

Swarm intelligence, enhanced by deep learning, is changing how drones are coordinated. These systems can perform complex tasks with precision, from disaster response to agricultural monitoring. Regulatory frameworks, such as the EU AI Act, aim to ensure these technologies are developed responsibly.

Ethical Considerations in Deep Learning

Ethical challenges in AI demand immediate attention. As these technologies become more integrated into daily life, addressing issues like bias, fairness, and privacy is crucial. Ensuring ethical practices not only builds trust but also enhances the reliability of AI systems.

Bias and Fairness in AI Models

Bias in AI models can lead to unfair outcomes. For example, Reuters reported in 2018 that Amazon had scrapped an experimental recruiting tool after it was found to favor male candidates. This highlights the importance of diverse data and rigorous testing to ensure fairness.

Facial recognition systems often exhibit accuracy disparities across different demographics. Google’s model cards initiative promotes transparency by documenting model performance and limitations. Such efforts help mitigate bias and improve accountability.

Privacy Concerns and Data Security

Privacy is a major concern in AI development. Since 2018, GDPR fines have exceeded €4 billion, underscoring the need for robust data protection measures. Apple’s use of differential privacy in iOS adds statistical noise to collected data, helping protect individual users while still enabling useful aggregate insights.
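The basic idea of differential privacy can be sketched with the Laplace mechanism applied to a count. This is a generic textbook construction, not a description of Apple’s implementation; the `epsilon` value and the count of 100 are arbitrary.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) as the difference of two exponential samples."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(true_count, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    """
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)  # seeded only to make the example repeatable
noisy = private_count(100, epsilon=0.5, rng=rng)
```

Smaller values of `epsilon` add more noise, trading accuracy for stronger privacy.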

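Deepfake regulation is another critical area. The EU AI Act requires that AI-generated or manipulated content such as deepfakes be disclosed, and the EU’s proposed AI Liability Directive was intended to make it easier to claim damages for harm caused by AI systems. Consent frameworks, especially in medical data usage, ensure that individuals retain control over their personal information.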

Adversarial attacks pose a threat to AI systems. Robust training methods and algorithmic accountability, such as the right to explanation, are essential for building secure and trustworthy models.

“Ethical AI development requires a balance between innovation and responsibility.”

- Dataset bias: Addressing disparities in facial recognition accuracy.

- Model cards: Enhancing transparency in AI development.

- Differential privacy: Protecting user data in real-world applications.

- Deepfake regulation: Preventing misuse of generative technologies.

- Consent frameworks: Ensuring ethical use of sensitive data.

- Adversarial attacks: Strengthening model robustness.

- Right to explanation: Promoting algorithmic accountability.

Deep Learning in Research and Academia

The intersection of academia and industry is reshaping the future of AI. Universities and research institutions are driving innovation, while industry partnerships provide resources and real-world applications. This collaboration is accelerating advancements in algorithms and models.

Current Trends in Deep Learning Research

Open-source initiatives like PyTorch and TensorFlow dominate the landscape. These frameworks enable researchers to share and build upon each other’s work. Hugging Face’s contributions to dataset sharing have also fostered a collaborative environment.

Funding sources play a critical role. While NSF grants support academic projects, corporate sponsorships drive industry-focused research. This dual funding model ensures a diverse range of applications.

MIT’s 6-AI internship program is a prime example of talent development. It bridges the gap between academia and industry, preparing the next generation of AI experts.

Collaborations Between Industry and Academia

Partnerships like Stanford’s collaboration with hospitals are advancing medical AI. These initiatives leverage academic expertise and industry resources to solve real-world problems.

Patent landscapes reveal a mix of university and corporate ownership. This balance ensures that innovations benefit both sectors. Ethical review boards, such as IRBs for AI research, ensure responsible development.

The reproducibility crisis in machine learning is being addressed through checklists and standardized practices. These efforts enhance the reliability of published research.

“Collaboration between academia and industry is essential for driving AI innovation and ensuring its ethical application.”

- Open-source frameworks: PyTorch vs TensorFlow adoption

- Dataset sharing: Hugging Face’s community contributions

- Funding models: NSF grants vs corporate sponsorships

- Talent pipeline: MIT’s 6-AI internship program

- Patent ownership: University vs corporate landscapes

- Reproducibility: ML reproducibility checklist

- Ethical oversight: IRB for AI research

Conclusion

The evolution of artificial intelligence has been shaped by the rise of deep learning, a technology built on layered neural networks. From its beginnings in the 1940s to modern breakthroughs like ChatGPT, this field has transformed industries, enabling advanced applications in healthcare, finance, and beyond.

Despite its progress, challenges remain. Energy consumption and explainability are critical issues that need addressing. Innovations like neuromorphic computing and quantum AI are paving the way for the future, promising even greater advancements.

Responsible development is essential to ensure these technologies benefit society. Continued learning and collaboration will drive the next wave of innovation, making deep learning a cornerstone of tomorrow’s AI landscape.

FAQ

What is deep learning?

Deep learning is a subset of machine learning that uses neural networks with multiple layers to analyze complex data patterns. It excels in tasks like image recognition, speech processing, and natural language understanding.

How does deep learning differ from traditional machine learning?

Traditional machine learning relies on structured data and predefined features, while deep learning automatically extracts features from raw data using layered neural networks, making it more powerful for complex tasks.

Why is it called "deep" learning?

The term “deep” refers to the multiple layers in neural networks. These layers enable the system to learn hierarchical representations of data, enhancing its ability to solve intricate problems.

What are the key components of deep learning?

Key components include neurons, layers, activation functions, and training algorithms. These elements work together to process input data and generate accurate predictions.

What are some common deep learning architectures?

Popular architectures include Convolutional Neural Networks (CNNs) for image processing, Recurrent Neural Networks (RNNs) for sequential data, and Generative Adversarial Networks (GANs) for creating synthetic data.

What are the applications of deep learning?

Deep learning powers applications like image and speech recognition, natural language processing, autonomous vehicles, and fraud detection, transforming industries and everyday life.

What challenges does deep learning face?

Challenges include overfitting, underfitting, and high computational demands. Addressing these issues requires robust algorithms, large datasets, and advanced hardware.

How does deep learning fit into artificial intelligence?

Deep learning is a core component of AI, enabling systems to learn from data and perform tasks that mimic human intelligence, such as decision-making and pattern recognition.

What ethical concerns arise with deep learning?

Ethical concerns include bias in AI models, privacy issues, and data security. Ensuring fairness and transparency is crucial for responsible AI development.

What advancements are shaping the future of deep learning?

Recent breakthroughs include improved algorithms, better hardware, and innovative applications. These advancements are driving the technology toward more efficient and scalable solutions.